The big question on many people's minds is

"Should I get a V5 or GeForce2?" The answer depends on a

few things. If you want raw speed, the GeForce 2 may be the way to

go, but if you prefer stability, anti-aliasing, and cinematic effects, the

V5 may be the right card for you. If you are a real speed nut, your

choice is between the GeForce 2 now, or the V5 6000 in a month or

two. But with speed comes cost. The GeForce 2 cards range

from $300 to over $400, and the V5 6000 will debut at around $600. That's too

much money for many people.

The Hardware:

The first thing you will notice about the V5 5500 card is its size.

It's huge. I haven't seen a card this big since the ill-fated

Obsidian board from Quantum 3D. If you have a small, cramped case,

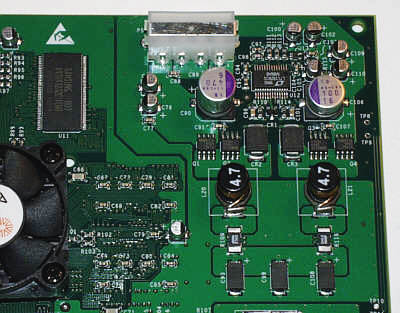

it may be impossible to get a V5 5500 card installed. The next thing

you will notice is that this is the first consumer level video card that

requires a power connector. In fact, the large size of the board may

be due to the power control circuitry shown here.

The board contains 64MB of high quality

Samsung SGRAM, with 32MB dedicated to each of the 2 processors. You also

get a driver disk and a small installation manual. In addition, you get

a mail-in coupon that entitles you to a copy of WinDVD, but I really

wish they would include a WinDVD CD in every box, avoiding the need to

wait for the mail. Finally, the box contains a note apologizing for

the 2 week delay in shipping, and a complimentary Voodoo5 mouse pad that

looks cool.

The Setup:

I tested the V5 5500 card on 3 setups.

1) The first was an Abit KA7 motherboard with an Athlon 550 and

a golden fingers overclock card. The setup was overclocked to 700MHz with

a core voltage of 1.7v. The test setup included 128 MB of

NEC PC-133 SDRAM (CAS3).

2) The second setup was a Celeron 400MHz, overclocked to 75MHz x 6

= 450MHz on an Abit VT6X4 VIA-KX-133 motherboard with 128MB PC-133 SDRAM.

3) The third setup was a PIII 650E and Gigabyte FC-PGA adapter on

the VT6X4 motherboard, again with 128MB of PC-133 SDRAM. This setup was

overclocked to 124MHz x 6.5 = 806MHz.
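The overclocked speeds quoted for setups 2 and 3 are simply the front side bus speed times the CPU multiplier. A minimal sketch of that arithmetic follows; the function name `cpu_clock_mhz` is just an illustrative helper, and the inputs are the bus speeds and multipliers listed above.

```python
def cpu_clock_mhz(fsb_mhz: float, multiplier: float) -> float:
    """Core clock = front side bus speed x CPU multiplier."""
    return fsb_mhz * multiplier


# Bus speed and multiplier as listed for setups 2 and 3.
celeron = cpu_clock_mhz(75, 6)    # Celeron 400 on a 75MHz bus
piii = cpu_clock_mhz(124, 6.5)    # PIII 650E on a 124MHz bus

print(f"Celeron: {celeron:.0f}MHz")  # Celeron: 450MHz
print(f"PIII:    {piii:.0f}MHz")     # PIII:    806MHz
```

Since the multiplier stays fixed in these setups, raising the bus speed is the only lever, which is why the AGP slot speed also rises in the PIII setup.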

Overclocking the V5:

I could not find any method of overclocking the V5 card in the drivers

that come with it. But programs like PowerStrip by Entech

give you full control over your video cards.

Using the overclocking slider in PowerStrip, I

tried increasing the memory/core clock from its normal setting of 166MHz

up to 175MHz, 180MHz, 185MHz, and 190MHz. The highest level I deemed

safe was 185MHz. At 190MHz, 3D Mark 2000 would occasionally

hang. The performance boost in 3D Mark 2000 at 185MHz was not very

significant, only about 4%. I did most of the following benchmarks with

the V5 card at 180MHz.
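To put the PowerStrip settings in perspective, here is a rough sketch of how much each step raises the clock over the 166MHz default. The helper `percent_gain` is an illustrative name, not anything from PowerStrip itself.

```python
def percent_gain(base: float, new: float) -> float:
    """Percentage increase going from base to new."""
    return (new - base) / base * 100


DEFAULT_MHZ = 166  # stock memory/core clock of the V5 5500

for mhz in (175, 180, 185, 190):
    print(f"{mhz}MHz: +{percent_gain(DEFAULT_MHZ, mhz):.1f}% clock")
```

At 185MHz the clock is up about 11.4%, while 3D Mark 2000 rose only about 4%, so the score clearly does not scale one-to-one with the card's clock on this system.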

The other method of overclocking the V5

card is on the bus. We tested the V5 on several platforms, including

a PIII 650E overclocked to 124MHz on the FSB. This translates to an

AGP slot frequency of about 82MHz, which is notably higher than the

default speed of 66MHz. We had no troubles whatsoever with the V5

5500 card at this AGP speed. Even when overclocking the V5 card to

180MHz with PowerStrip, and 82MHz on the AGP bus simultaneously, the

system never hung even once during all the testing sessions.

Considering it took days to complete the testing, that's pretty good

stability.
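The AGP figures above are what a 2/3 bus divider would produce. The sketch below assumes that divider (the chipset setting is not stated in this review, so treat `AGP_DIVIDER` as an assumption that happens to match the quoted numbers).

```python
# Assumption: the chipset runs the AGP slot at 2/3 of the front side bus.
AGP_DIVIDER = 2 / 3


def agp_mhz(fsb_mhz: float) -> float:
    """AGP slot frequency for a given front side bus speed."""
    return fsb_mhz * AGP_DIVIDER


for fsb in (100, 124):
    print(f"FSB {fsb}MHz -> AGP {agp_mhz(fsb):.1f}MHz")
```

With a 100MHz bus this gives the standard 66MHz-class AGP speed, and with a 124MHz bus it lands between 82 and 83MHz, roughly 24% over spec.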

Driver Stuff:

The settings I used were VSYNC off, bilinear filtering, and mipmap

dithering on. I used the drivers included on the V5 disk. There was

some controversy over what drivers were available on the V5 CD. I

was over at 3dfx's message boards over the last few days, and there was a

big buzz about the default drivers not being the optimal ones. This

turned out not to be true. For those people who observed an improvement

with the other driver set, it may have just been that your original driver

installation did not work correctly. In any case, there are two

drivers on the CD. The default one appears to be a unified V3/V4/V5 driver

set. It lists your card as a "Voodoo Series" card in the

device manager. In a Win9x subdirectory, there is a V5 standalone

driver that reports the card as a Voodoo5 in the device manager, but it

did not improve our performance in 3D Mark 2000 by even one 3D Mark.

All-in-all, the drivers for the V5 5500 are

very good. The things that were missing included the Level of Detail (LOD)

slider that would help sharpen anti-aliased images, and a built-in

overclock slider. Both should be added to future driver sets.

I noticed a few glitches in the V5

drivers. One was the "darkened menus" phenomenon, where a

video resolution switch in Q3 sometimes caused the menu to go very dark,

until a game was started. One time, when switching resolutions in

the middle of a game, the game went very dark until I left the arena and

started a new game. This may be an OpenGL driver glitch, and should

be fixed quickly. The other driver glitch was again associated with

resolution switching while in a game. In Unreal Tournament,

sometimes a resolution switch would lead to very thin, shimmering lines

along the top and left side of the screen.

I should note that these minor driver

glitches are insignificant relative to the problems you can encounter when

installing GeForce drivers.

Benchmarks:

Rather than doing oodles of benchmarks, I decided to keep it simple.

I did benchmarks in 3D Mark 2000 for Direct 3D performance, Quake III for

OpenGL, and Unreal Tournament for Glide.

3DMark 2000 (Direct 3D):

Celeron:

The chart below is a simple demonstration of why Celeron-1

owners do not want a V5 card. The graph shows how many "3D

Marks" the V5 was able to deliver on several systems. Consider the

2500 3D Mark range to be the minimum you want to see on your system.

This puts Celeron-1 systems well below the minimum hardware requirement

(results for 16 bit textures).

Voodoo5 card 3D Mark Benchmarks on 3 systems

The next chart shows how much better a GeForce1

card works on a Celeron running at 450MHz, even in 32 bit color.

Keep in mind that you want your system above 2500 3D Marks at a given

setting.

Voodoo5 vs. GeForce1 3D Mark 2000 Benchmarks on a Celeron 450MHz system

The bottom line here is that you need at least a

PIII or an Athlon to run a V5 5500 card at acceptable frame rates.

If you have a Celeron-1 system, you will do better to get a low-cost

GeForce-1 card.

Athlon 700:

The next chart is from an Athlon 700 running 3D Mark 2000. For these

particular tests, the Voodoo5 was running at its default speed of 166MHz.

The front side bus speed for the setup with the V5 card was 100MHz,

putting the AGP slot at its standard 66MHz value. At these graphics

settings it looks like 32 bit textures are out of the question if you want

4X FSAA. If 2X FSAA is acceptable to you, then 800x600x32 will give

you good Direct 3D performance with an Athlon 700.

V5 5500 3D Mark 2000 benchmarks: Athlon 700MHz

Pentium III 800:

The chart below shows 3D Mark 2000 results from the PIII 800, with a front

side bus setting of 124MHz. This means the V5 card was running at

about 82MHz in the AGP slot, up 16MHz from its default value. And

yet, the numbers are very similar to those observed with the Athlon 700.

V5 5500 3D Mark 2000 benchmarks: PIII 800MHz

Quake III Arena (OpenGL):

Testing under Quake III was done on the PIII 800 system

(124MHz front side bus setting). The Voodoo5 card was also

overclocked from 166 to 180MHz with PowerStrip. Geometric Detail and

Texture Detail were set to maximum. I used 16 bit textures to keep the

frame rates high. All Q3 benchmarks were done with the sound on, to

simulate actual gaming conditions. To show how many frames per second are

being rendered in Q3, hit the tilde key (~), type in:

/cg_drawfps 1

and hit Enter. I ran timedemo

benchmarks, and also collected fps counts at specific locations in an

actual deathmatch game. The chart below shows Q3 frames per second (fps)

while looking at specific locations in the Q3DM1 map during an actual

single player game (level 1). The three locations I aimed the crosshair at

were: 1) looking into the big tongue in Q3DM1 from the far wall, 2)

looking at the center arch in the courtyard from the far wall, and 3),

looking out of the big tongue, pointing at the QIII logo on the far wall.

This is where I got some of the lowest fps readings on this map.

V5 5500 benchmarks in Quake III Arena: PIII 800MHz

For some reason, even with VSYNC disabled, and

com_maxfps set to 150, there was a 90 fps limit (my monitor had a refresh

rate of 75Hz). But the graph still shows what is most important: how many

graphics features you can turn on before frame rates become

unacceptable. Obviously, with graphics settings this high, the

1024x768 resolution at 4x FSAA is not going to offer a smooth gaming

experience.

Timedemo stats using demo001 were as follows:

| Resolution | No FSAA | 2x FSAA | 4x FSAA |
|---|---|---|---|
| 640x480 | 79.8fps | 79.8fps | 63.2fps |
| 800x600 | 70.7fps | 74.7fps | 42.5fps |
| 1024x768 | 77.8fps | 54.9fps | 27.0fps |

You can see there is a big difference with

4X FSAA enabled. But still, at 800x600 and 4x FSAA, I never saw frame rates

drop below about 30. That would change with a larger map and many

players. So if you want to use 4X FSAA in Quake 3, you will need to

limit the resolution.
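As a rough way to read the timedemo numbers, the sketch below works out how much of the no-FSAA frame rate is lost at 4x FSAA for each resolution. The figures are copied from the demo001 results above; the dictionary layout and the helper name `fps_change_pct` are just illustrative choices.

```python
# demo001 timedemo results: (no FSAA, 2x FSAA, 4x FSAA) in fps.
timedemo = {
    "640x480": (79.8, 79.8, 63.2),
    "800x600": (70.7, 74.7, 42.5),
    "1024x768": (77.8, 54.9, 27.0),
}


def fps_change_pct(base_fps: float, fsaa_fps: float) -> float:
    """Percentage change in fps relative to the no-FSAA result."""
    return (fsaa_fps - base_fps) / base_fps * 100


for resolution, (none, _two_x, four_x) in timedemo.items():
    print(f"{resolution}: 4x FSAA {fps_change_pct(none, four_x):+.1f}%")
```

By this measure 4x FSAA costs roughly a fifth of the frame rate at 640x480, about 40% at 800x600, and about 65% at 1024x768. The no-FSAA results are nearly flat across resolutions, which suggests something other than the card may be limiting them, so the true cost of 4x FSAA could be somewhat larger than these percentages imply.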

So how did the image look in Q3 with

anti-aliasing enabled? Excellent, even at 640 x 480. In fact I can

say that with high detail on, and 4X FSAA, the image in Q3 was just about

the best I've ever seen in a game. I think that the image with 4X FSAA and

640 x 480 would look very good on monitors up to 19". People

with larger monitors will want to go to 800 x 600. In my opinion, the game

was very playable, and appeared very smooth, at 800x600 with 4x FSAA

enabled.

Unreal Tournament (Glide):

Benchmarking with Unreal Tournament was also done on the

PIII 800 system (124MHz front side bus setting). Unreal Tournament

does not work particularly well as a benchmark, but nonetheless, will give

you an idea of what kind of frame rates you can expect on a high-end

system with a V5 5500. For these benchmarks, I also wanted a

real-world situation, so I played the first level (Hyperblast) all the way

through with the different settings.

V5 5500 benchmarks in Unreal Tournament: Pentium III 800MHz (avg. fps in a whole game)

Overall, frame rates were quite good, and

game play was smooth at 800x600 (16bit) with 4x FSAA enabled.

Remember, the above numbers give the average fps throughout an entire

game. The images were exceptional. The only problem with anti-aliasing is that

small text can become blurred. This was particularly evident in Unreal

Tournament's menu system. Such situations are where level of detail

(LOD) adjustments come in handy. Playing with LOD can really improve

the way small text looks with anti-aliasing on. 3dfx says this feature

will be enabled with an "LOD slider" in the next release of

drivers.

Summary:

NVidia fans will probably say the V5 5500 is too little too late, but in my

opinion, the 5500 has some really nice advantages over GeForce 2

cards. First, of course, is the hardware assisted full scene

anti-aliasing, which works in all games. GeForce and GeForce 2 cards

have software anti-aliasing built into the just-released drivers,

but it does not work in all situations yet, and does not look as good as 3dfx's

solution.

Second, while some people have reported no problems

getting GeForce 1 and 2 cards to work, many people have had endless

driver, conflict, motherboard, and/or power supply problems. We have had

more trouble with GeForce cards than any other video card line in our

entire 3 year history. We can get them to work in almost every

situation... eventually, sometimes after much effort. But more often

than not, even after getting things to work, there are problems with

flashing textures, missing menus, etc. On the other hand, the V5

drivers seem absolutely solid in comparison. For most people, the V5

will be a plug and play experience.

Third, the T-buffer

cinematic effects will be a nice addition when games are written to make

use of them. Both motion blur and depth of field blur can add a very nice

touch to games if implemented properly. The improved shadow and reflection

effects will also be welcome. We all like special effects. How long

before these effects will appear in new games is anyone's guess, but 3dfx

says they are working with Microsoft to implement the T-buffer cinematic

effects in DirectX 8.0, which is due out this Fall.

Important Note to

Celeron-1 owners: I do not consider Voodoo 5 cards to be an

option for Celeron-1 owners. If you cannot upgrade to a PIII, then

the Voodoo5 will not give you a performance boost over a Voodoo3

card. This goes for AMD K6-2 and K6-3 systems as well. I am a bit disappointed

with 3dfx for their minimum system requirements listed on the V5 5500

box. It states that all you need is a Pentium-1 90MHz machine.

This is an absurd claim. No video card can speed up a P90 to the point

where it will run Quake III Arena. The main reason that anyone would still

be using a P90 is a lack of money, so why would 3dfx even try to sell them

a $300 video card? If you have a low-end system (anything below a

PIII or Athlon), use your money to get a low-cost GeForce-1 SDRAM card, and use

the Detonator 5.22 drivers to overclock it. I do not even recommend

V5 cards for Pentium II owners. If you can't upgrade to a processor

with either SSE extensions or 3D Now!, running at 500MHz or higher, then

you will benefit from a GeForce card much more than a V5 card.

But if speed is your need, GeForce 2

cards will deliver even more... usually at a higher price. GeForce-2 cards

will give you another 5% to 20% performance boost over GeForce-1 cards,

depending on your system and the game. Some games don't run any faster on

them though, so keep that in mind. Also keep in mind that older

games will not run particularly fast on a GeForce card in a low end

system, since they can't make use of the GeForce transform and lighting (T&L)

engine. So don't get a GeForce card if you're trying to speed up

older games in a low end system. GeForce cards are for speeding up

newer 3D games, like Quake III and Unreal Tournament.

I expect that the V5 6000 cards will be noticeably faster than even the

fastest GeForce 2 cards, but the $600 price tag will be prohibitive for

most people. If 3dfx drops the price on the V5 6000 to $499 or less, they

will sell much better. Considering that 64MB DDR GeForce2 cards can exceed

$400, I suppose there is room for a faster card in the $500 to $600 range.

Prices always drop after the initial spike in sales that comes with a

product debut. So by mid to late Summer, prices should be coming down on

V5 products.

After a good long look at the V5 5500, I can

say 3dfx has a solid, good performing board with lots of nice features and

great image quality. When the cinematic effects are implemented in

future games, Voodoo5 cards will offer even more eye candy for 3dfx fans.

Despite the caveats listed in the Cons section below, I give the V5 5500 an

88% rating. (At $250, it would have gotten a 90%.)

Pros:

- Rock solid
- Great full-scene anti-aliasing
- Fast if paired with a fast system
- Nice cinematic effects (in future games)
- Good drivers
- 32 bit color
- Large texture support
- DVD assist
- Plays all 3 game types (D3D, OpenGL and Glide)


Cons:

- High price (it will come down)
- Not as fast as a GeForce 2 card
- Not for Celeron-1 or older system owners
- Minimum system requirements: PIII or Athlon
- Full sized card means it won't fit in all cases
- No overclock features built into the drivers
- Level of Detail slider not enabled yet: some blurring of text with anti-aliasing on

Rating: 4.4 out of 5 smiley faces (88%)

:) :) :) :) +

Availability: Good


Copyright June 19, 2000 KickAss Gear